Original blog post: https://www.pluribusnetworks.com/blog/understanding-pluribus-networks-at-vaporios-kinetic-edge/

Today we announced the successful deployment of Netvisor® ONE OS and the Adaptive Cloud Fabric™ 5.0 at multiple Vapor IO Kinetic Edge™ sites in Chicago, IL. This deployment validates Pluribus’ ability to connect multiple edge data center sites seamlessly, providing a controllerless distributed fabric with SDN automation and deep network slicing to support multitenancy and multiple edge applications.

What Is the Kinetic Edge?

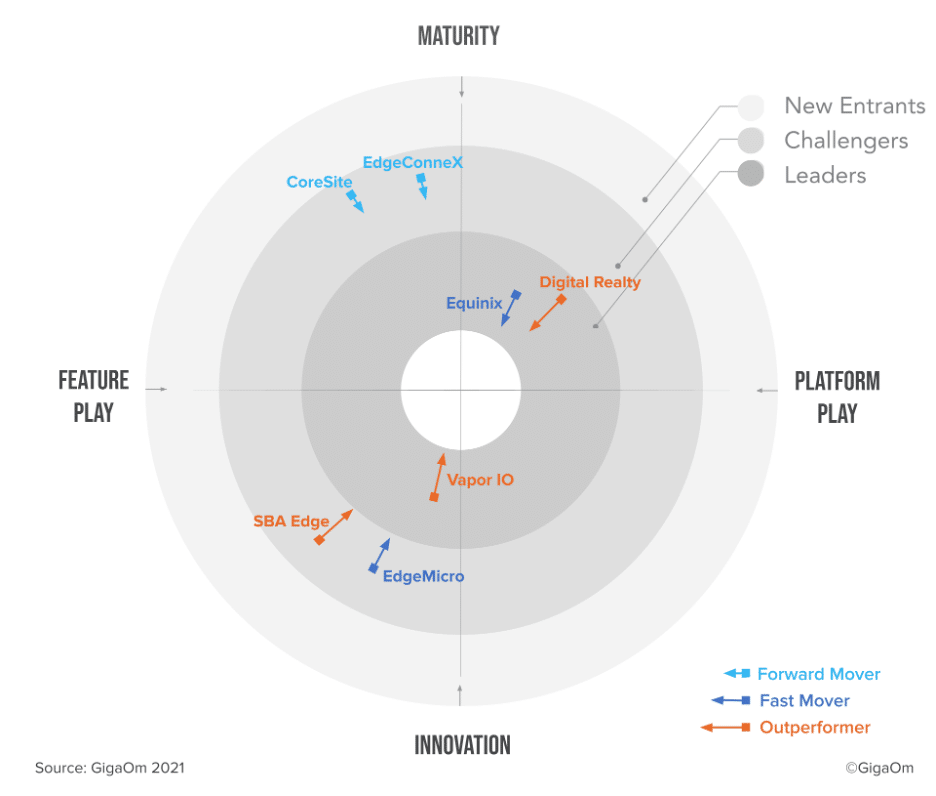

Vapor IO’s Kinetic Edge is a new kind of infrastructure architecture that uses software and high-speed connectivity to combine multiple micro data center facilities into a single logical data center. For developers and operators, it presents a geographically dispersed collection of micro data centers as a single virtual facility with multiple availability zones. Vapor IO has plans to deploy Kinetic Edge colocation infrastructure in over 80 cities across North America, in close proximity to 4G and 5G base stations. This plan is mapped out with their partner Crown Castle, which owns over 40,000 cell towers and rich fiber connectivity throughout the United States. Each city will have approximately six micro data center environments, enabling customers of Vapor IO to place compute and storage extremely close to users and things to support a new class of applications with requirements that cannot be met by traditional centralized cloud architectures. If you would like to read more about the drivers of edge compute, read my earlier blog, Pluribus Adaptive Cloud Fabric: Now Optimized for Distributed Cloud. All of these data center sites are networked together over a private fiber network, and each site has direct access to local meet-me rooms in carrier-neutral hotels as well as the internet.

Vapor IO’s Kinetic Edge

Pluribus is a founding member of the Kinetic Edge Alliance, a coalition of leading companies bringing end-to-end edge infrastructure solutions to customers. Our goal is to help foster an open community of companies that can deliver end-to-end edge solutions to our mutual customers, and that’s why this first deployment of the Adaptive Cloud Fabric in Chicago included not only Vapor IO but additional KEA partners Packet and MobiledgeX.

How Does the Adaptive Cloud Fabric Fit?

One might wonder why it would make sense to deploy Pluribus in an environment that already has a robust fiber network. Based on numerous customer discussions, it is clear that a common scenario in edge compute will be a data center operator with some compute and storage in a Kinetic Edge location and the rest on prem, in other colo facilities, on the factory floor or out at a remote site. The Kinetic Edge SDN network provides connectivity from each micro data center to other micro data centers, carrier-neutral hotel facilities and the internet. Pluribus rides over that network and extends our SDN-controlled fabric all the way down to the customer’s top of rack (TOR) switch.

Pluribus offers the Adaptive Cloud Fabric that runs on top of open networking TOR switches to reach deeply into the data center and deliver data center unification across multiple sites, even if widely geographically distributed. The fabric offers distributed SDN control (sometimes called “controllerless”) at the management layer, so there is no need to deploy a costly controller that adds single points of failure, complexity and latency in distributed environments. Every switch in the fabric hosts a micro SDN controller holding a full view of the state and configuration of the entire fabric, as well as a database of all endpoints (aka vPorts) connected to the fabric. With this highly distributed approach, the entire fabric can be accessed and controlled from any switch in the fabric via a CLI or a single REST API call. For example, add a VLAN or an ACL on any switch and it will automatically be propagated to all switches in the fabric. If one node cannot implement the configuration change, an alert is raised and the change is rolled back across all switches until the issue can be resolved, ensuring consistent synchronization of config and policy and virtually eliminating the potential for human error.
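The all-or-nothing behavior described above can be illustrated with a minimal Python sketch. This is not the Netvisor ONE implementation or its API: the `Switch` class, the `healthy` flag and the `add_vlan_fabric_wide` function are hypothetical names made up for illustration, and the sketch only models the idea that a change is either accepted by every switch or undone everywhere.

```python
from dataclasses import dataclass, field


@dataclass
class Switch:
    """Hypothetical stand-in for one fabric node (not a Netvisor ONE object)."""
    name: str
    healthy: bool = True
    vlans: set = field(default_factory=set)

    def apply_vlan(self, vlan_id: int) -> None:
        # An unhealthy node models a switch that cannot implement the change.
        if not self.healthy:
            raise RuntimeError(f"{self.name} cannot apply VLAN {vlan_id}")
        self.vlans.add(vlan_id)

    def remove_vlan(self, vlan_id: int) -> None:
        self.vlans.discard(vlan_id)


def add_vlan_fabric_wide(switches, vlan_id):
    """Apply a VLAN to every switch, or leave every switch unchanged."""
    newly_applied = []
    for sw in switches:
        already_present = vlan_id in sw.vlans
        try:
            sw.apply_vlan(vlan_id)
        except RuntimeError as err:
            # Undo the change only where this call introduced it.
            for done in reversed(newly_applied):
                done.remove_vlan(vlan_id)
            print(f"ALERT: {err}; rolled back {len(newly_applied)} switch(es)")
            return False
        if not already_present:
            newly_applied.append(sw)
    return True


if __name__ == "__main__":
    fabric = [Switch("site-a"), Switch("site-b"), Switch("site-c", healthy=False)]
    ok = add_vlan_fabric_wide(fabric, 100)
    print(ok, [sorted(sw.vlans) for sw in fabric])
```

In this toy model the third switch rejects the change, so the VLAN is removed again from the first two and the fabric stays consistent.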

A mesh of VXLAN tunnels is automatically created with all virtual tunnel endpoints (VTEPs) terminated and accelerated in hardware on the switch, eliminating the need for compute nodes to consume processor cycles for networking. Additionally, this creates a fabric overlay that is agnostic to the compute virtualization layer, avoiding lock-in by vendors like VMware with their NSX offering, which is tightly coupled to ESXi virtualization. Instead, one can now deploy multiple technologies such as VMware, OpenStack, Kubernetes, etc., for virtualization and orchestration. Ultimately, once deployed, the Adaptive Cloud Fabric offers the customer a single logical programmable fabric that will operate over multiple underlays, including the Kinetic Edge underlay. Thus, the data center operators, be they the enterprise or a service provider, will have a single, consistent programmable fabric even when deployed in multiple colo facilities with multiple underlays.
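To give a feel for what a mesh of tunnels implies, here is a small Python sketch that enumerates the tunnels in a full mesh between VTEPs. It assumes a full mesh with one tunnel per pair of endpoints, which the text does not spell out, and the site names and the `full_mesh_tunnels` helper are hypothetical. It says nothing about how the fabric actually builds its tunnels.

```python
from itertools import combinations


def full_mesh_tunnels(vteps):
    """Return every unordered pair of VTEPs, i.e. one tunnel per pair."""
    return list(combinations(sorted(vteps), 2))


if __name__ == "__main__":
    # Hypothetical VTEP names, one per site.
    sites = ["site-a", "site-b", "site-c"]
    for left, right in full_mesh_tunnels(sites):
        print(f"{left} <-> {right}")
```

Under that assumption the tunnel count grows as n(n-1)/2 for n endpoints, which is one reason automatic tunnel creation matters as sites are added.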

The VXLAN overlay provides an abstraction and virtualization of the underlay networks – once the fabric is deployed, the heterogeneous networks connecting all of the compute infrastructure now appear as a homogeneous virtualized abstraction. The fabric offers a rich set of Layer 1 (VirtualWire), Layer 2 and Layer 3 network services and deep slicing across the data, control, and management planes for multitenancy and application isolation. Leveraging distributed IPv4/IPv6 routing with anycast gateways, a single IP is used to control each slice, and virtual machines or containers can be moved to any compute location without having to reconfigure any IP addresses. Each slice is called a VNET and runs across the entire fabric, while additional hierarchical isolation can be created within each slice for east-west traffic by deploying distributed VRFs (the fabric supports up to 2000 VRFs per fabric). The fabric also offers rich telemetry with visibility for every port in the fabric, every device connected to each port and every TCP flow for performance monitoring and rapid troubleshooting.

Finally, because the Pluribus implementation does not affect the way the TOR switch operates at the underlay level, the fabric can easily be inserted into brownfield environments using standard L2 and L3 protocols. This is not the case with controller-based implementations that require a greenfield deployment (or full rip and replace), where all devices in the fabric must be SDN-enabled with that vendor’s particular SDN protocols.

What Are the Details of the Deployment?

Pluribus deployed across three Kinetic Edge colocation sites, with each site hosting a different white box switch, including a Dell EMC switch, an Edgecore switch and a Pluribus Freedom series switch. On each switch we deployed the Netvisor ONE OS Release 5.0 and activated the Adaptive Cloud Fabric software. Each switch was connected to a bare metal server from Packet and also to a third-party spine switch using standard networking protocols, which in turn connected into a third-party router providing ingress/egress at each Kinetic Edge site. Each router connected over dark fiber to routers installed in the other two colocation sites—the configuration was replicated at each site.

Pluribus created a fabric across the three sites with two VNET slices. One slice was configured to connect to a nearby wireless radio that was directly connected to the Kinetic Edge network—this was the low-latency slice. The second slice was configured so that all traffic would travel out across the internet and then back to the same wireless radio, simulating processing happening in a centralized (non-edge) cloud environment—this was the regular latency slice. MobiledgeX then deployed a facial recognition application onto the Packet bare metal servers that in turn would connect in a client-server fashion with the client running on an Android phone, which connected over the wireless network. The facial recognition app has a utility that can provide a latency measurement on how long it takes to recognize a new face. The results verified that the low-latency slice leveraging edge compute provided much lower latency for this application.

John Welch from Vapor IO runs the facial recognition application in the Edens Chicago Kinetic Edge site. The red text shows the regular latency slice and the green text shows the low-latency edge slice.

The test showed that the entire system worked and demonstrated the latency advantages of an edge compute architecture. The network latency for the cloud-based (regular latency) slice came in at 82 ms, while the low-latency edge slice came in at 13 ms, more than six times lower. The value of edge compute for a new class of applications is clear.
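The comparison between the two measurements can be checked with a few lines of Python. The function name `compare_latency` is made up for this sketch; the only inputs are the two latency figures reported above.

```python
def compare_latency(cloud_ms, edge_ms):
    """Return (ratio, percent reduction) of edge latency versus cloud latency."""
    ratio = cloud_ms / edge_ms
    reduction_pct = (cloud_ms - edge_ms) / cloud_ms * 100
    return ratio, reduction_pct


if __name__ == "__main__":
    ratio, reduction_pct = compare_latency(cloud_ms=82, edge_ms=13)
    print(f"{ratio:.1f}x lower latency, {reduction_pct:.0f}% reduction")
    # prints "6.3x lower latency, 84% reduction"
```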

Summary

A new class of applications is emerging that cannot be satisfied by centralized data center architectures. This includes applications like factory automation, video surveillance, energy production monitoring, public safety, autonomous vehicles, AR/VR, smart cities and many more. These applications demand lower latency, data thinning, autonomy and privacy that must be delivered by deploying compute and storage closer to users and things—at the edge. This explosion of mini and micro data centers deployed in remote and often lights-out data center locations presents a number of challenges, not the least of which is networking. The Kinetic Edge Alliance is working together to solve these problems and provide end-to-end distributed cloud solutions for enterprises, managed service providers, regional cloud service providers and communication service providers. This deployment demonstrates how multiple KEA partners can come together to deliver an end-to-end solution to help customers deploy new, low-latency revenue-generating applications.